Screen Space Planar Reflections in Ghost Recon Wildlands

Reflections done in screen-space

Screen space reflections (SSR) are now used in numerous video games to render reflections. They are expected to be less expensive than rendering a true reflection pass and more accurate than cubemap-based reflections, as long as the reflected geometry is present on the screen. Still, the technique isn’t cheap in a real-time application and it suffers from many visual artifacts: SSR isn’t the final answer to the reflection problem. However, in specific, controlled situations, these flaws can be alleviated and SSR can deliver astonishing results.

Motivation

Here we’re going to talk about another form of SSR that has been developed to support the water rendering ambitions of Ghost Recon Wildlands. It aims to provide multiple high-quality reflection surfaces from close to far distances with little performance impact, for the specific case of planar reflectors.

Projection

Hash resolve

Filling the gaps

Optimizations

Multiple water planes

A complete example

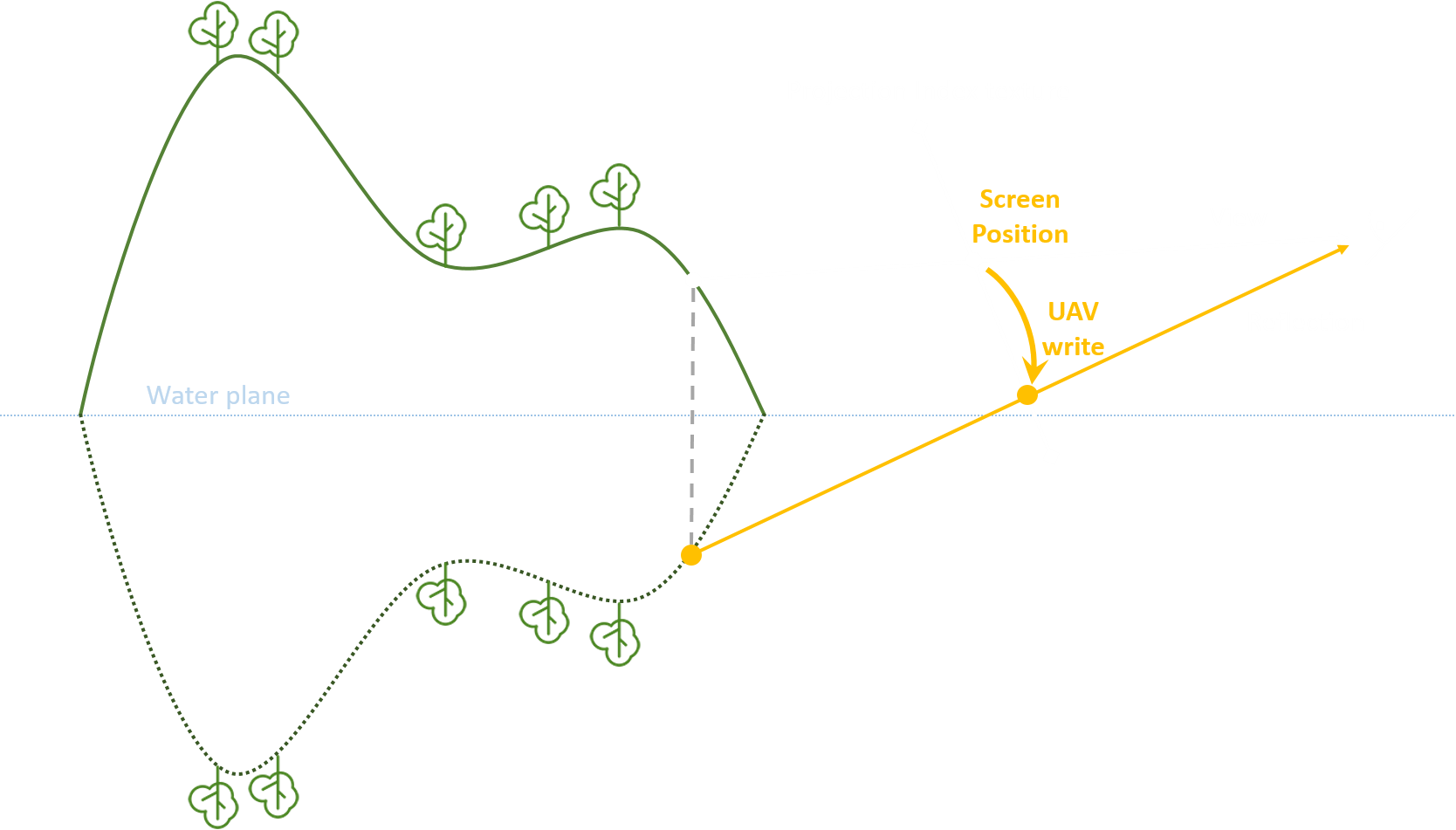

Projection

Screen Space Planar Reflections (SSPR) are built on one concept: the Projection Hash Buffer. This screen-space texture holds, for each pixel of the reflection view, the location of the main-view pixel that should be projected there.

To this end, for every pixel in the main depth buffer:

- The pixel is unprojected to world space.

- This world position is reflected about a known water plane and projected back to screen space.

- We write the screen location of the source pixel at the reflected screen position in the projection hash buffer via a UAV write.

In practice, we encode the source pixel location in a single R32_UINT texel using a simple hash formula:

uint ProjectionHash = PixelY << 16 | PixelX

(Why this ordering? Wait for the next section.)

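    float WaterHeight = …

    float4 PS_ProjectHash(float2 ScreenUV) : SV_Target0
    {
        // Rebuild the world-space position of the main-view pixel from its depth.
        float3 PosWS = Unproject(ScreenUV, MainDepthBuffer);

        // Mirror it about the horizontal water plane (Z is the up axis here).
        float3 ReflPosWS = float3(PosWS.xy, 2 * WaterHeight - PosWS.z);
        float2 ReflPosUV = Project(ReflPosWS);

        uint2 SrcPosPixel = ScreenUV * FrameSize;
        uint2 ReflPosPixel = ReflPosUV * FrameSize;

        // Store the source pixel location at the reflected pixel location.
        ProjectionHashUAV[ReflPosPixel] = SrcPosPixel.y << 16 | SrcPosPixel.x;

        return 0; // Dummy output
    }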

Here, ReflPosPixel is the pixel-space location of the projected pixels in the reflection.

We use it to store in the projection hash buffer where the source pixel SrcPosPixel lies in the main view.

Once it’s encoded in the hash texture it’s ready to be used for the second pass.
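The encoding above, and the decoding that the second pass needs, can be sketched on the CPU in C++. This is a minimal illustration rather than the game's code: `PixelCoord`, `PackProjectionHash` and `UnpackProjectionHash` are hypothetical names, and only the bit layout (Y in the high 16 bits, X in the low 16 bits) comes from the formula above.

```cpp
#include <cassert>
#include <cstdint>

// Pixel location in the main view. The struct and function names are
// hypothetical; only the bit layout comes from the article's formula.
struct PixelCoord {
    std::uint32_t x;
    std::uint32_t y;
};

// Pack Y into the high 16 bits and X into the low 16 bits.
inline std::uint32_t PackProjectionHash(PixelCoord p) {
    // Each coordinate must fit in 16 bits, otherwise the fields would overlap.
    assert(p.x <= 0xFFFFu && p.y <= 0xFFFFu);
    return (p.y << 16) | p.x;
}

// Inverse of PackProjectionHash: recover the source pixel location.
inline PixelCoord UnpackProjectionHash(std::uint32_t hash) {
    return PixelCoord{hash & 0xFFFFu, hash >> 16};
}
```

With this layout each coordinate is limited to 16 bits, so the frame can be at most 65,536 pixels wide or high.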

Hash resolve

The idea is quite simple: a fullscreen quad is going to:

- Fetch the projection hash texture.

- Decode the hashes to retrieve the location of the source color pixels.

- Fetch the source pixels and output them in the final reflection target.

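    float4 PS_ResolveHash(float2 ScreenUV) : SV_Target0
    {
        uint Hash = ProjectionHashTex[ScreenUV * FrameSize].x;

        // Unpack the source pixel location: X in the low 16 bits, Y in the high 16 bits.
        uint x = Hash & 0xFFFF;
        uint y = Hash >> 16;

        // A hash of 0 is treated as "nothing was projected onto this texel".
        if (Hash != 0)
        {
            float4 SrcColor = MainColorTex[uint2(x, y)];
            return SrcColor;
        }
        else
            return 0;
    }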

That was pretty straightforward, but at this point the results can be disappointing:

Concurrent reflective candidates resulting in blinking pixels


There are two flagrant issues here:

- The projection of the source positions into the reflection is not an injective transformation: two different pixels from the main view can land on the same reflection pixel and compete for it, with nothing to decide which one should prevail. Hence the blinking pixels.

- There are gaps in the reflection, caused by occlusion in the main view that prevents pixels which are valid in the reflection from being projected.

That’s where the projection hash texture and the hashing function we chose finally make sense.

By using the InterlockedMax intrinsic when writing to the UAV, competing hashes are ordered first by their high 16 bits, and therefore by their PixelY value: the largest hash always wins.

    // Read-write max when accessing the projection hash UAV
    uint projectionHash = SrcPosPixel.y << 16 | SrcPosPixel.x;
    InterlockedMax(ProjectionHashUAV[ReflPosPixel], projectionHash, dontCare);

Competing projections are now resolved “from bottom to top”: the candidate with the highest PixelY, i.e. the source pixel lowest on screen, wins. The source pixel location stored in the projection hash is therefore the one closest to the water plane, and thus the closest to the camera in the reflection view. The projection is now stable.
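To see why the result no longer depends on the order in which pixels are written, here is a CPU-side sketch in C++ in which a `std::atomic` stands in for one texel of the UAV. `AtomicMaxU32` is a hypothetical helper that emulates the HLSL intrinsic with a compare-and-swap loop; it is an illustration, not the shader itself.

```cpp
#include <atomic>
#include <cstdint>

// Emulates InterlockedMax on one 32-bit texel: the stored value only ever
// grows, so the largest hash wins whatever the write order is.
inline void AtomicMaxU32(std::atomic<std::uint32_t>& dest, std::uint32_t value) {
    std::uint32_t current = dest.load(std::memory_order_relaxed);
    while (current < value &&
           !dest.compare_exchange_weak(current, value,
                                       std::memory_order_relaxed)) {
        // On failure compare_exchange_weak reloads `current`;
        // retry only while `value` is still the larger one.
    }
}
```

Three candidates with PixelY values of 100, 300 and 200 written in any order leave the PixelY = 300 hash in the texel, whatever their PixelX values are.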

Filling the gaps

The missing geometry is the #1 issue with the SSR approach and we need to find a way to fill it or else the reflection effect would be disastrously broken.

First we’ll deal with the missing reflection on the screen borders. This is due to geometry that is absent from the main view but needed in the reflection. There’s no actual solution besides rendering a bigger main frame that extends beyond the screen, so that we could fetch from it to fill the borders. But in a real-world game where every microsecond counts, this is not an option.

Instead we’ll add some stretch on the projected location based on the distance between the source pixel and the water plane.

    float HeightStretch = (PosWS.z - WaterHeight);
    float AngleStretch = saturate(-CameraDirection.z);
    float ScreenStretch = saturate(abs(ReflPosUV.x * 2 - 1) - Threshold);

    ReflPosUV.x *= 1 + HeightStretch * AngleStretch * ScreenStretch * Intensity;
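The effect of each factor is easier to see with numbers. The following C++ sketch transcribes the four lines above for the CPU; `StretchParams` and `StretchReflectedU` are hypothetical names, and the `threshold` and `intensity` values used in the note below are made-up tuning values, not the ones used in the game.

```cpp
#include <algorithm>
#include <cmath>

// Clamp to [0, 1], like the HLSL saturate intrinsic.
inline float Saturate(float v) { return std::min(std::max(v, 0.0f), 1.0f); }

// Hypothetical parameter block; threshold and intensity are tuning values.
struct StretchParams {
    float waterHeight;
    float cameraDirectionZ;  // Z component of the camera forward vector
    float threshold;
    float intensity;
};

// Returns the stretched horizontal UV of a reflected pixel.
inline float StretchReflectedU(float reflU, float posHeightWS,
                               const StretchParams& p) {
    // Grows with the height of the source pixel above the water.
    const float heightStretch = posHeightWS - p.waterHeight;
    // Non-zero only when the camera looks downward.
    const float angleStretch = Saturate(-p.cameraDirectionZ);
    // Non-zero only past `threshold` away from the horizontal screen center.
    const float screenStretch =
        Saturate(std::fabs(reflU * 2.0f - 1.0f) - p.threshold);
    return reflU * (1.0f + heightStretch * angleStretch * screenStretch *
                               p.intensity);
}
```

With a water height of 2, a camera direction Z of -0.5, a threshold of 0.5 and an intensity of 0.1, a pixel 10 units above the water at U = 0.9 moves to about U = 1.035, while the same pixel at U = 0.5 is left untouched because its screen factor is zero.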

Reflection stretching to fill the missing pixels on the borders


Next come the holes in the projection, left by geometry that is occluded by closer pixels in the main view and therefore could not be projected.

- A classic temporal reprojection helps a lot: very little movement is enough to fill the cracks almost completely.

- As a fallback for pixels that still couldn’t be filled with relevant information but which could still be valid (i.e. not in the sky), we simply let the reflection surface generated in the previous frame “bleed” onto the current one. It surely isn’t correct, but it gracefully avoids any discontinuity and fills the remaining gaps with a coherent color and luminosity.
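One way to read the two steps above is as a per-pixel priority chain. The C++ sketch below is only an interpretation under stated assumptions: the article does not detail how the reprojection or the bleeding are implemented, so the `GapFillInputs` fields and the `FillReflectionGap` function are hypothetical, and the inputs are assumed to have been computed beforehand.

```cpp
struct Color { float r, g, b, a; };

// Per-pixel inputs of the gap filling; every field name is hypothetical.
struct GapFillInputs {
    bool  hasCurrent;          // the hash resolve produced a color this frame
    Color current;
    bool  hasReprojected;      // temporal reprojection found a usable sample
    Color reprojected;
    bool  isSky;               // pixel falls in the sky: no fill is wanted
    Color previousReflection;  // last frame's reflection at this pixel
};

// Picks the best available source for one reflection pixel.
inline Color FillReflectionGap(const GapFillInputs& in) {
    if (in.hasCurrent)     return in.current;             // real data first
    if (in.hasReprojected) return in.reprojected;         // then history
    if (!in.isSky)         return in.previousReflection;  // then the bleed
    return Color{0.0f, 0.0f, 0.0f, 0.0f};                 // leave it empty
}
```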

The reprojection and bleeding fill the gaps with coherent values


Optimizations

We have a nice real-time solution at this point, but let’s try to squeeze out some extra performance. We are going to use an additional, initially empty stencil and see how it can drastically change the cost of the SSPR.

- In the projection pass, we discard the pixels that lie below the water plane, as they have no chance of contributing to the reflection. The pixels that pass this test are marked in the additional stencil.

- The hash resolve pass uses this mask to resolve the reflection only on the pixels that were discarded by the projection pass (those whose stencil value has not been marked), as they are the only ones that can display a reflection.

With these optimizations, the SSPR cost scales linearly with the fraction of the screen covered by pixels that are not sky and that lie below the water plane.
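The masking logic can be sketched on the CPU as follows. This C++ snippet is a simplified illustration, assuming one world-space height per pixel is already available; `BuildProjectionStencil` and `ResolveCoverage` are hypothetical names, and a real implementation would rely on the GPU stencil test rather than on arrays.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// 1 = the pixel is on or above the water plane and gets projected,
// 0 = the pixel was discarded by the projection pass.
inline std::vector<std::uint8_t> BuildProjectionStencil(
    const std::vector<float>& pixelHeightsWS, float waterHeight) {
    std::vector<std::uint8_t> stencil(pixelHeightsWS.size(), 0);
    for (std::size_t i = 0; i < pixelHeightsWS.size(); ++i) {
        if (pixelHeightsWS[i] >= waterHeight) {
            stencil[i] = 1;
        }
    }
    return stencil;
}

// Fraction of the pixels that the resolve pass still has to process,
// i.e. the unmarked ones.
inline double ResolveCoverage(const std::vector<std::uint8_t>& stencil) {
    if (stencil.empty()) {
        return 0.0;
    }
    std::size_t unmarked = 0;
    for (std::uint8_t s : stencil) {
        if (s == 0) {
            ++unmarked;
        }
    }
    return static_cast<double>(unmarked) /
           static_cast<double>(stencil.size());
}
```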

In Ghost Recon Wildlands, this let us generate any water reflection surface for a cost of 0.3 to 0.4 ms on consoles at 1/4 resolution.

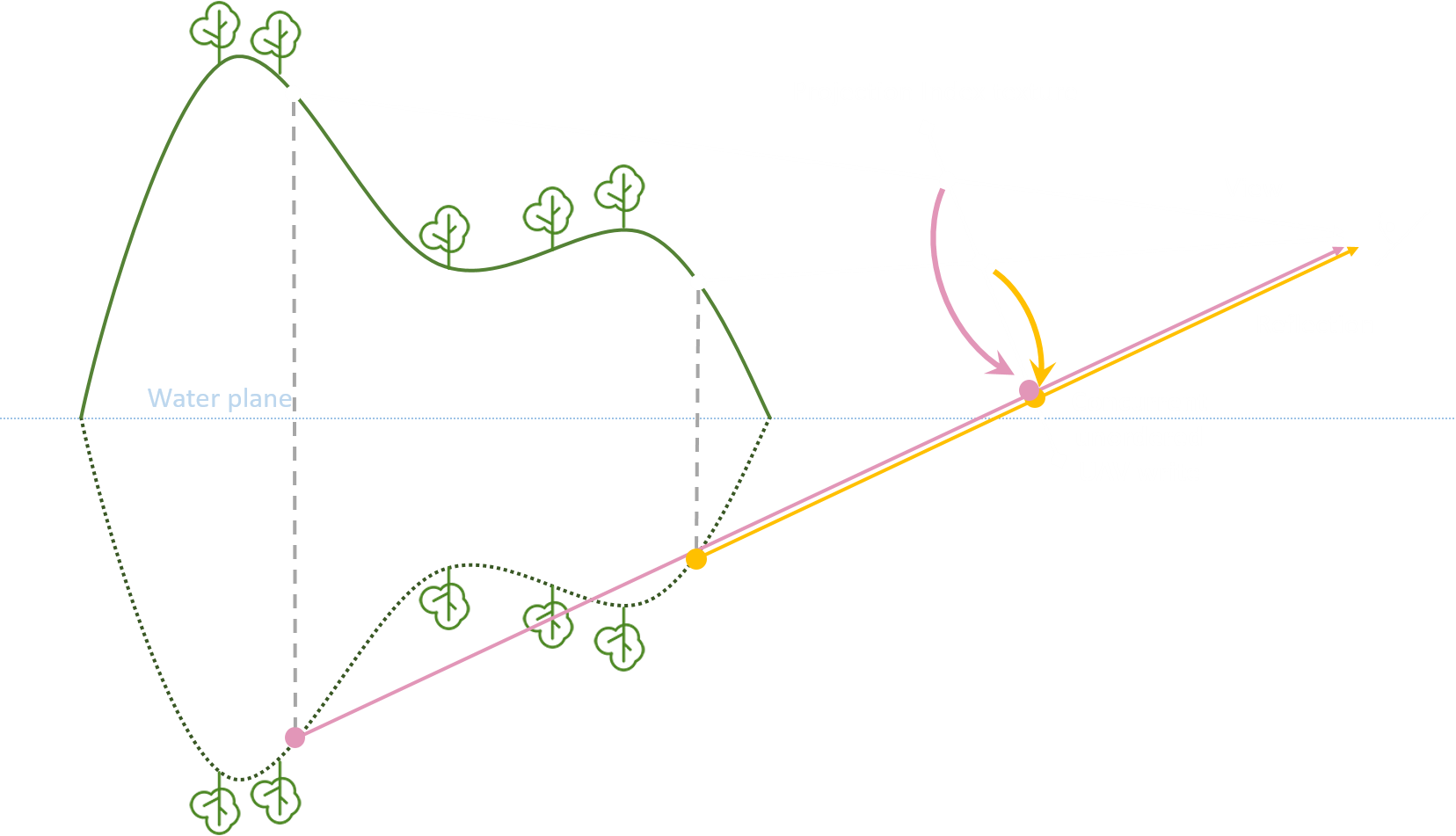

Multiple water planes

As we achieved our goal of an affordable reflection technology, we’re able to compute it several times in a single frame, allowing us to handle multiple water surfaces.

To achieve this, we’ll have to know which planes need the SSPR rendered.

- When rendering the water, each water pixel increments the counter associated with its plane ID.

- These counters are then processed to find out which planes are actually visible, and we keep the N planes that cover the most pixels on the screen.

- We generate N reflection layers in a texture array using SSPR.

- In the next frame, the water uses its plane ID to fetch from the array and retrieve its reflection.
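The plane selection step can be sketched in C++ as shown below. It assumes the per-plane counters have already been read back into an array indexed by plane ID; `SelectReflectionPlanes` is a hypothetical name, and the article does not say how ties are broken, so keeping the lowest ID first is an arbitrary choice.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns the IDs of the (at most) maxLayers planes that cover the most
// pixels, most visible first. Planes with a zero counter are not visible
// and are never selected.
inline std::vector<std::size_t> SelectReflectionPlanes(
    const std::vector<std::uint32_t>& pixelCountPerPlane,
    std::size_t maxLayers) {
    std::vector<std::size_t> visible;
    for (std::size_t id = 0; id < pixelCountPerPlane.size(); ++id) {
        if (pixelCountPerPlane[id] > 0) {
            visible.push_back(id);
        }
    }
    // Stable sort keeps the lower plane ID first when two counts are equal.
    std::stable_sort(visible.begin(), visible.end(),
                     [&](std::size_t a, std::size_t b) {
                         return pixelCountPerPlane[a] > pixelCountPerPlane[b];
                     });
    if (visible.size() > maxLayers) {
        visible.resize(maxLayers);
    }
    return visible;
}
```

The position of a plane ID in the returned list can then serve as its layer index in the texture array.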

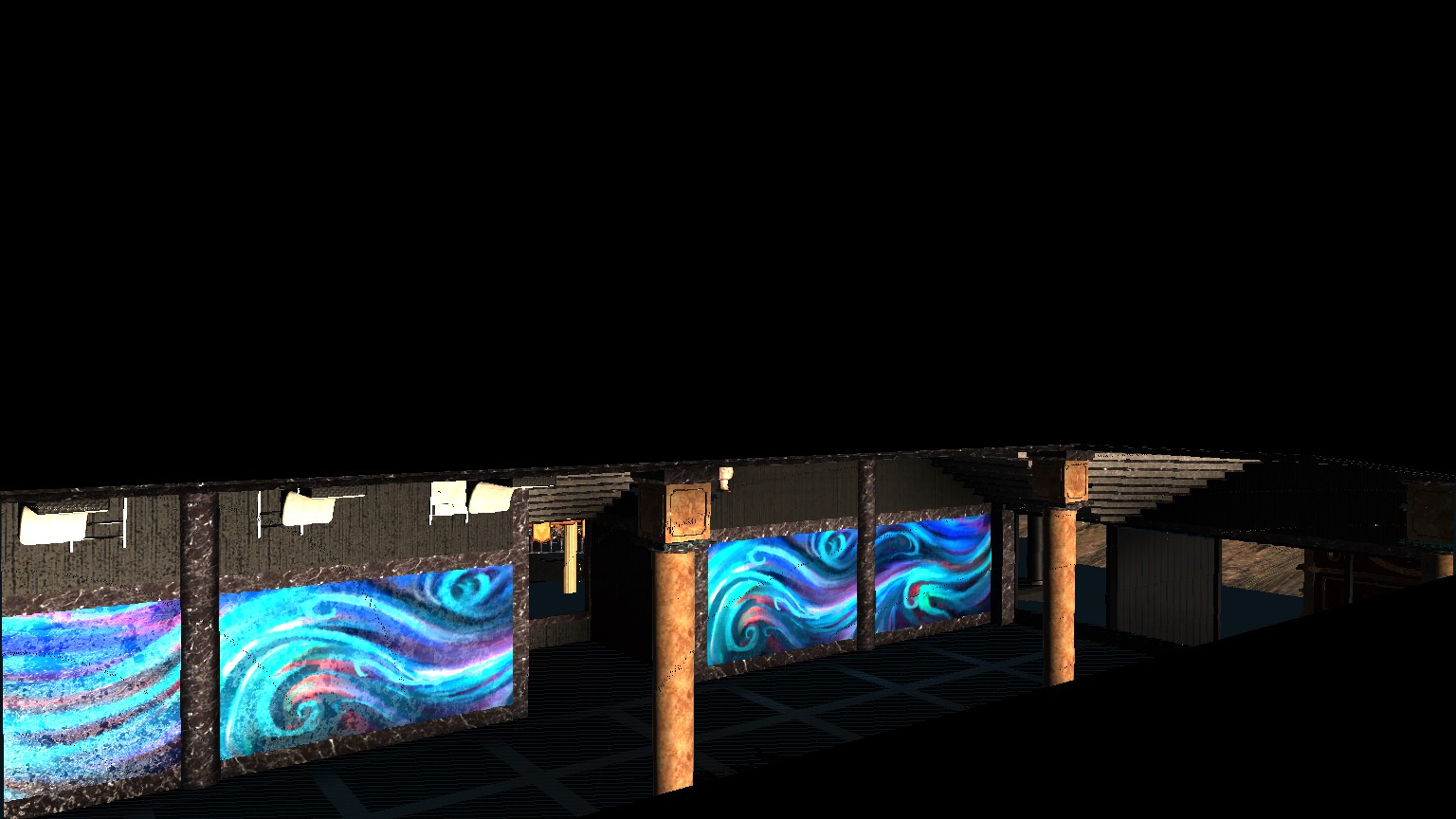

Multiplanar reflection on the pool and the lake surfaces


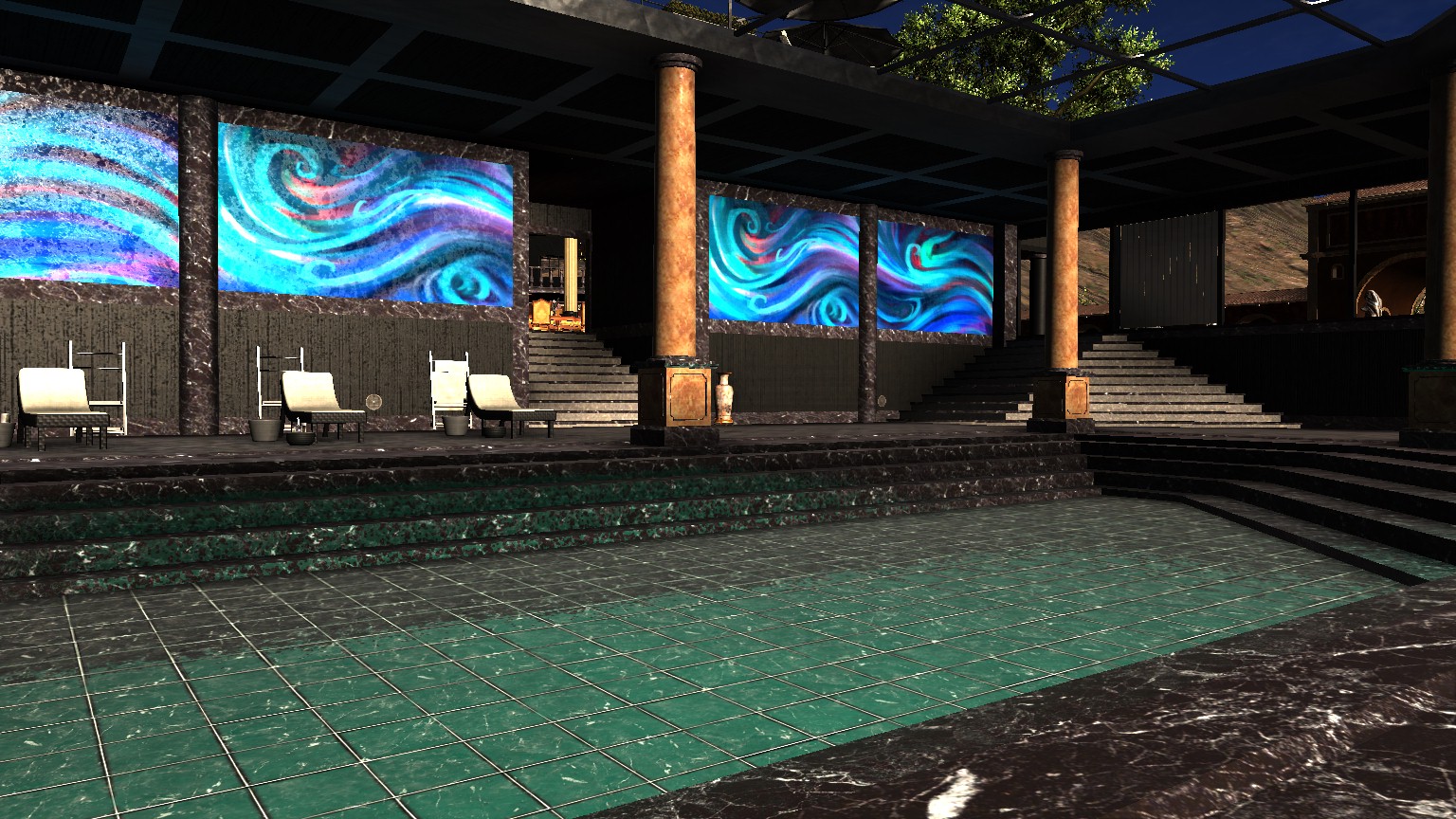

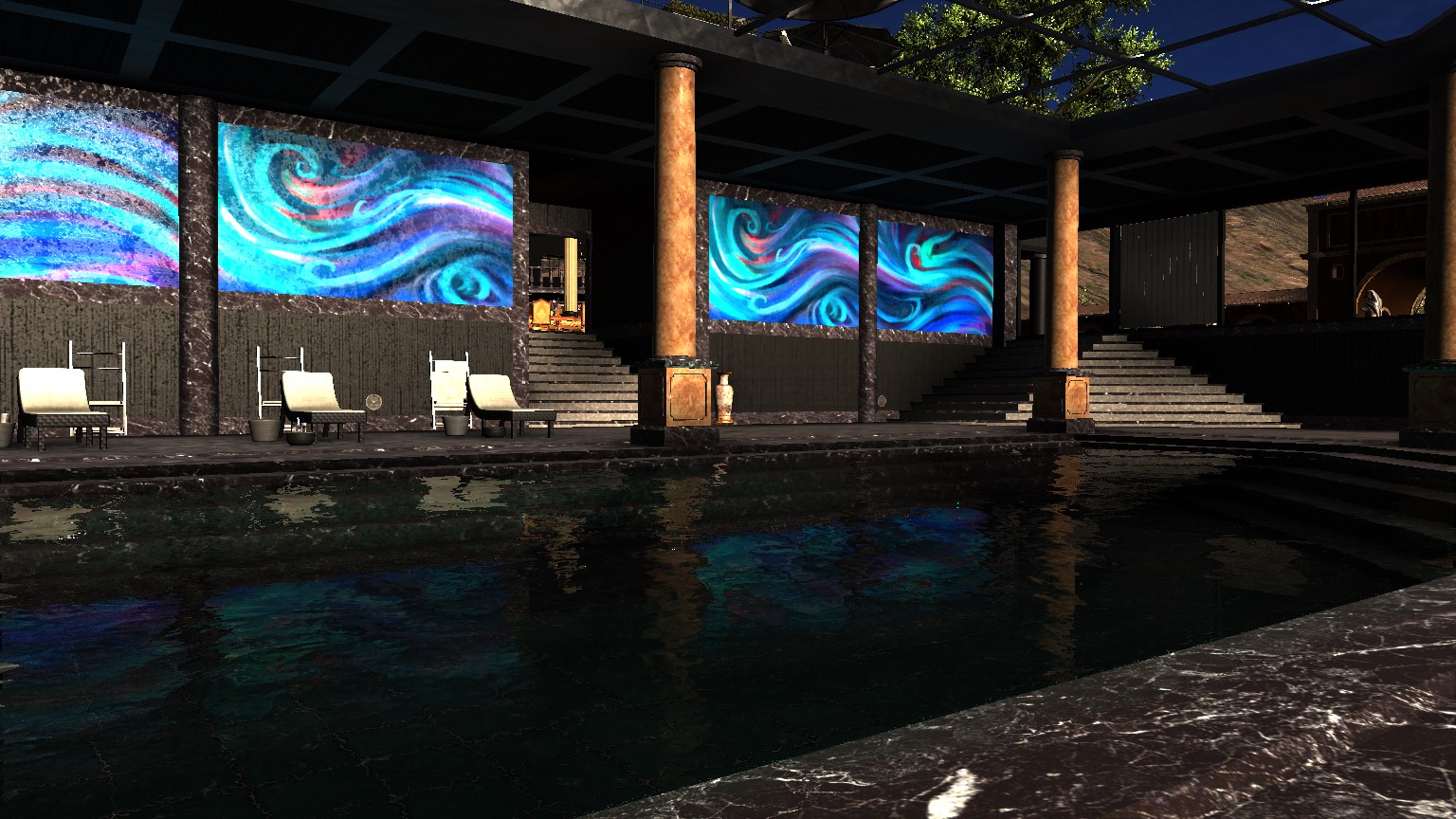

A complete example

Lit color buffer


Stencil

SSPR projection hash

Resolved hash


Water rendering
